One-shot pruning of gated recurrent unit neural network by sensitivity for time-series prediction

Abstract

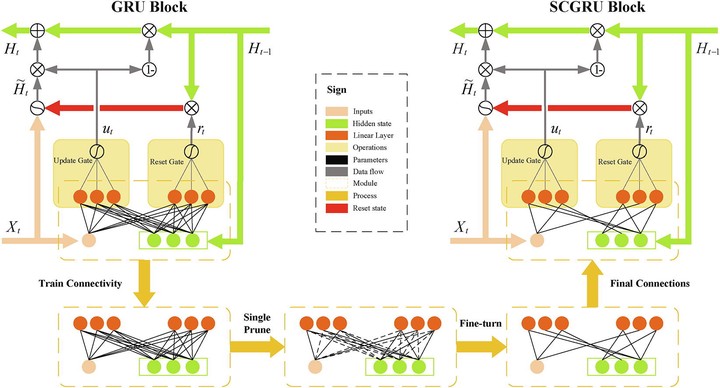

Although deep learning models have been successfully adopted in many applications, they remain difficult to deploy on energy-limited devices (e.g., mobile devices) because of their high computational complexity. In this paper, we focus on reducing the cost of Gated Recurrent Units (GRUs) for time-series prediction tasks. We propose a new pruning method that identifies and removes the neural connections that have little influence on the network loss, using a controllable threshold on the absolute value of the pre-trained GRU weights. This differs from existing approaches, which usually try to find and preserve the connections with large weight values. We further propose a sparse-connection GRU model (SCGRU) that needs only a one-time pruning (with fine-tuning) rather than multiple prune-retrain cycles. Extensive experiments demonstrate that the proposed method substantially reduces storage and computation costs while achieving state-of-the-art performance on two datasets.
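As a rough illustration of the one-shot idea summarized above, the following sketch thresholds the absolute values of a weight matrix once and then keeps the resulting mask fixed during fine-tuning. It is a minimal sketch under stated assumptions, not the method proposed in this paper: the function names `one_shot_prune`, `sparsity`, and `masked_sgd_step`, the random stand-in weight matrix, the threshold value, and the plain SGD update are all hypothetical, and the sensitivity criterion itself is not reproduced here.

```python
import numpy as np


def one_shot_prune(weights, threshold):
    """Zero out entries whose absolute value is below `threshold`.

    Returns the pruned weights and the binary mask that was applied.
    """
    mask = (np.abs(weights) >= threshold).astype(weights.dtype)
    return weights * mask, mask


def sparsity(mask):
    """Fraction of connections removed by the mask."""
    return 1.0 - float(mask.mean())


def masked_sgd_step(weights, grad, mask, lr=0.01):
    """One fine-tuning step (plain SGD, an assumption) that keeps
    pruned connections at zero by reapplying the mask."""
    return (weights - lr * grad) * mask


# Hypothetical stand-in for one pre-trained GRU weight matrix
# (3 gates x 4 hidden units, 5 inputs); not taken from the paper.
rng = np.random.default_rng(0)
W = rng.normal(scale=0.1, size=(12, 5))

# Prune once; the threshold is the controllable knob.
W_pruned, mask = one_shot_prune(W, threshold=0.05)

# During fine-tuning, the same mask is reused at every step.
dummy_grad = np.ones_like(W)  # placeholder gradient for illustration
W_finetuned = masked_sgd_step(W_pruned, dummy_grad, mask)
```

Raising the threshold in this sketch removes more connections at the cost of a larger perturbation to the pre-trained weights, which is why a single pruning pass is typically followed by fine-tuning with the mask held fixed.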