Liangzhi Li (李良知)

Co-Founder & CTO

Climind

VP and Chief Scientist

Xiamen Meet You Co., Ltd.

Professor

Qufu Normal University

Biography

Liangzhi Li is VP and Chief Scientist at Xiamen Meet You Co., Ltd. (美柚), Co-Founder & CTO of Climind, and a Professor in the Department of Computer Science at Qufu Normal University (曲阜师范大学). He was previously an Assistant Professor at Osaka University (大阪大学) from 2021 to 2023, after working there as a postdoctoral researcher from 2019 to 2021.

He earned his Ph.D. in Engineering from the Muroran Institute of Technology (室蘭工業大学), Japan, in 2019, after completing both his B.S. and M.S. degrees in Computer Science at South China University of Technology (华南理工大学, SCUT) in 2012 and 2016, respectively.

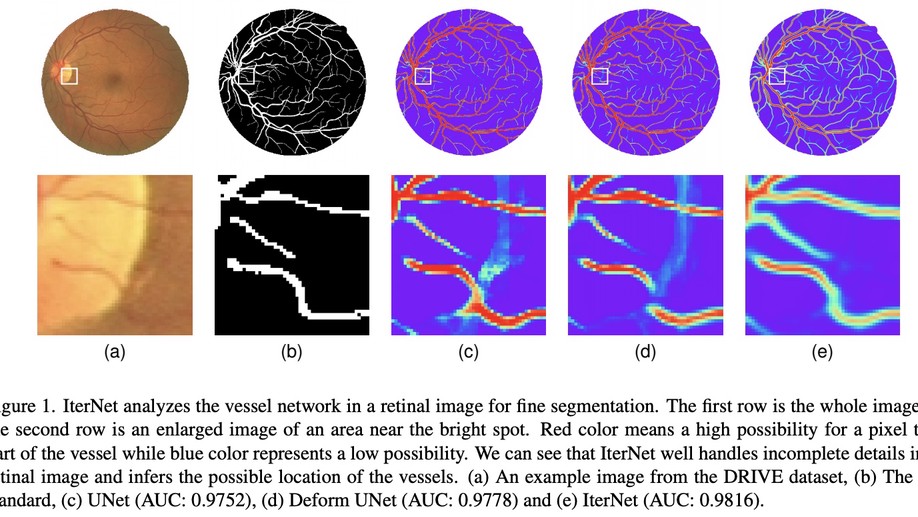

His research interests include computer vision, explainable AI, large language models (LLMs), and medical imaging. His academic work has been recognized through programs such as the Shandong Taishan Scholar Young Expert (泰山学者青年专家) and the Shandong Provincial Overseas Excellent Young Scholars (山东省海外优青).

Working across industry and academia, he remains committed to technological innovation, artificial intelligence research, and education.